Multinomial Logistic Regression is a classification method that generalizes Logistic Regression to multiclass problems, i.e., problems with more than two possible discrete outcomes. So, can we use the same algorithm for Digit Recognition, which is also a classification problem with multiple outcomes?
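To make the "generalizes" part concrete: multinomial logistic regression computes one linear score per class and turns the scores into probabilities with the softmax function. The post itself works in R; below is a minimal Python sketch of that step (the function names are illustrative, not part of nnet), showing that with two classes softmax collapses to the ordinary logistic sigmoid.

```python
import math

def softmax(scores):
    """Turn a list of K class scores into K probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    """Binary logistic function used by ordinary logistic regression."""
    return 1.0 / (1.0 + math.exp(-z))

# With two classes and one score fixed at 0 (a baseline class),
# softmax reduces to the logistic sigmoid.
z = 1.7
print(softmax([z, 0.0])[0], sigmoid(z))

# With ten classes (digits 0-9) it yields one probability per digit.
probs = softmax([0.1 * k for k in range(10)])
print(len(probs), round(sum(probs), 6))
```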

Let’s give it a try!

I have practiced the Decision Tree and Random Forest algorithms on the Digit Recognition problem in my previous posts. Here, I am using the dataset from the Neural Network exercise of Andrew Ng's Machine Learning course. I converted the .mat file to a .csv file; the steps are described in another post here.

So, the first few steps are the same as in my last two posts and are therefore copied from there.

Let’s divide the whole process into the following steps:

1. Load Data

2. Divide Data into Training and Test Set

3. Exploratory Data Analysis

4. Create a Model

5. Prediction

1. Load Data:

I have used the dataset from “machine-learning-ex4” from Andrew Ng’s Machine Learning Course. I converted the .mat files to .csv files. Let’s load the data first and take a look.

Now, let’s see what this loading step does.

- Load 2 data files: We have 2 data files. The first data file has 5000 training examples, where each training example is a 20 x 20 (400) pixel grayscale image of a digit. Each pixel is represented by a floating-point number indicating the grayscale intensity at that location, and the 20 by 20 grid of pixels is “unrolled” into a 400-dimensional vector. The second data file is a 5000-dimensional vector y that contains the labels for the training set.

- Merge the 2 Datafiles: I have loaded both datasets and merged them into a single dataset. Let’s check the dimensions of each file.

- Rename the “output” column: The column in the output file is named “V1”, and the pixel-value dataset also has a column named “V1”. So, I renamed the output column to “Output” to avoid any confusion.

- Convert Output to a Factor column: If we check the class of the “Output” column, it is “integer”. Let’s convert it to a factor column with 10 levels.

- Check if any column has Missing Values: I just wanted to check whether there are any missing values. It turns out there are none, so we are all set for the next step.
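The loading steps above can be sketched in Python as follows. This is a hypothetical helper, not the author's R code: `load_and_merge` takes file-like CSV inputs (in-memory stand-ins here for the converted pixel and label files), appends the labels as an “Output” column, collects the distinct label values (the Python counterpart of R factor levels), and flags empty cells as missing values.

```python
import csv
import io

def load_and_merge(pixel_file, label_file):
    """Hypothetical helper: merge pixel rows and labels, rename the label column.

    pixel_file: file-like CSV with a header row (V1..V400 for the digit data).
    label_file: file-like CSV whose single column is also headed "V1".
    Returns (header, X, y, levels, has_missing).
    """
    def to_float(cell):
        # Treat empty cells as missing values.
        return None if cell.strip() == "" else float(cell)

    pixel_reader = csv.reader(pixel_file)
    pixel_header = next(pixel_reader)
    X = [[to_float(cell) for cell in row] for row in pixel_reader]

    label_reader = csv.reader(label_file)
    next(label_reader)  # skip the clashing "V1" header
    y = [None if row[0].strip() == "" else int(float(row[0])) for row in label_reader]

    if len(X) != len(y):
        raise ValueError("pixel and label files have different row counts")

    # Merged header: pixel columns plus the renamed label column.
    header = pixel_header + ["Output"]
    # Distinct label values play the role of R factor levels.
    levels = sorted({label for label in y if label is not None})
    has_missing = any(v is None for row in X for v in row) or None in y
    return header, X, y, levels, has_missing

# In-memory stand-ins for the two converted CSV files.
# The label "10" mimics the course data, where the digit 0 is stored as 10.
pixels = io.StringIO("V1,V2,V3\n0.0,0.5,1.0\n0.2,0.0,0.7\n")
labels = io.StringIO("V1\n10\n3\n")
header, X, y, levels, has_missing = load_and_merge(pixels, labels)
print(header, y, levels, has_missing)
```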

2. Divide Data into Training and Test Set:

In this step, we will divide the dataset into the Training and Test sets. We will use 70% of the data to train our Model and the remaining 30% to test its accuracy.
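A 70/30 split amounts to shuffling the row indices and cutting the list at 70%. The sketch below is a plain, unstratified random split in Python with an assumed seed of 123; an R implementation might instead stratify by class so that each digit keeps the same proportion in both sets.

```python
import random

def train_test_split(n_rows, train_fraction=0.7, seed=123):
    """Return (train_idx, test_idx): a shuffled partition of row indices."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    idx = list(range(n_rows))
    rng.shuffle(idx)
    cut = round(n_rows * train_fraction)
    return sorted(idx[:cut]), sorted(idx[cut:])

train_idx, test_idx = train_test_split(5000)
print(len(train_idx), len(test_idx))  # 3500 1500
```

Every row lands in exactly one of the two sets, and re-running with the same seed reproduces the same split.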

3. Exploratory Data Analysis:

This is a fun and colorful part. Let’s plot!

It’s time to find out what our Training Set looks like.

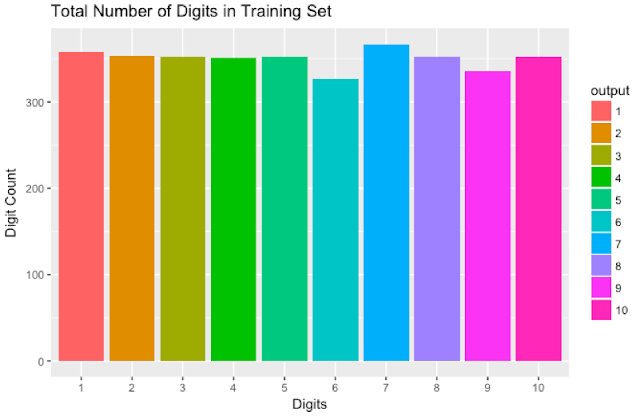

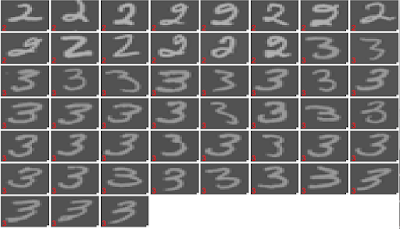

Hmm, the digits are almost equally distributed in the Training Dataset. Now, let’s see what the handwritten digits in our training set look like. We created a function for this, passed it a few randomly chosen records, and below is the output.
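Both checks in this section can be sketched in a few lines of Python: counting examples per label, and rendering an unrolled 400-value image as a 20 x 20 text grid. The helper names are hypothetical, and the `column_major=True` default assumes the pixels were unrolled column by column, as MATLAB/Octave do for the original .mat data; if your digits come out transposed, flip that flag.

```python
from collections import Counter

def class_distribution(labels):
    """Number of examples per label, sorted by label."""
    return dict(sorted(Counter(labels).items()))

def ascii_digit(pixels, side=20, threshold=0.5, column_major=True):
    """Render an unrolled side*side image as text: '#' if above threshold, else '.'.

    column_major=True assumes the vector was unrolled column by column
    (MATLAB/Octave order); pass False for row-by-row unrolling.
    """
    lines = []
    for r in range(side):
        chars = []
        for c in range(side):
            v = pixels[c * side + r] if column_major else pixels[r * side + c]
            chars.append("#" if v > threshold else ".")
        lines.append("".join(chars))
    return "\n".join(lines)

# Made-up example: a vertical stroke (a crude "1") stored in column-major order.
img = [0.0] * 400
for c in (9, 10):
    for r in range(2, 18):
        img[c * 20 + r] = 1.0
print(class_distribution([1, 7, 7, 10, 1, 7]))
print(ascii_digit(img))
```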

4. Create a Model:

We will use multinom() from the nnet library for this classification problem. Let’s build a Model!

It looks like the Model converged after a few iterations.
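For readers curious about what fitting involves, here is a from-scratch Python sketch of multinomial logistic regression trained by batch gradient descent on the cross-entropy loss, run on a tiny made-up 2D dataset. It is not what nnet does: as I understand its documentation, multinom() fits the model through nnet (a network with no hidden layer) using a BFGS optimizer and a baseline class, whereas this sketch uses plain gradient descent with one weight vector per class. The learning rate and epoch count are arbitrary assumptions.

```python
import math

def softmax(scores):
    m = max(scores)  # shift by the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scores_for(W, b, x):
    # One linear score per class: W[k] . x + b[k]
    return [sum(w * v for w, v in zip(Wk, x)) + bk for Wk, bk in zip(W, b)]

def fit_multinomial(X, y, n_classes, lr=0.1, epochs=1000):
    """Batch gradient descent on the mean cross-entropy loss.
    X: list of feature lists; y: integer labels in 0..n_classes-1."""
    n, d = len(X), len(X[0])
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        gW = [[0.0] * d for _ in range(n_classes)]
        gb = [0.0] * n_classes
        for xi, yi in zip(X, y):
            p = softmax(scores_for(W, b, xi))
            for k in range(n_classes):
                # Gradient of the loss w.r.t. score k is p_k - [k == y_i].
                err = p[k] - (1.0 if k == yi else 0.0)
                gb[k] += err
                for j in range(d):
                    gW[k][j] += err * xi[j]
        for k in range(n_classes):
            b[k] -= lr * gb[k] / n
            for j in range(d):
                W[k][j] -= lr * gW[k][j] / n
    return W, b

def predict(W, b, x):
    """Index of the class with the highest score (same as highest probability)."""
    s = scores_for(W, b, x)
    return max(range(len(s)), key=s.__getitem__)

# Tiny made-up dataset: three well-separated 2D clusters labeled 0, 1, 2.
X = [[0.0, 0.0], [0.5, 0.2], [0.2, 0.4],
     [4.0, 0.0], [4.5, 0.3], [4.2, -0.2],
     [0.0, 4.0], [0.3, 4.5], [-0.2, 4.2]]
y = [0, 0, 0, 1, 1, 1, 2, 2, 2]
W, b = fit_multinomial(X, y, n_classes=3)
print([predict(W, b, x) for x in X])
```

For the digit data, X would hold the 400 pixel values per record and the labels would need mapping to 0..9 first.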

5. Prediction:

We will use the test dataset and this Multinomial Logistic Regression Model and check how accurate the predictions are.

Below is the Confusion Matrix.

The accuracy of this Model is 0.7986666667, i.e., roughly 80% of the test records are classified correctly.
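The confusion matrix and the accuracy figure are related simply: accuracy is the sum of the diagonal (correct predictions) divided by the total number of test records. A minimal Python sketch with made-up labels, using rows for the actual class and columns for the predicted class (the orientation is a convention; other tools may transpose it):

```python
def confusion_matrix(actual, predicted, labels):
    """Counts with rows = actual class and columns = predicted class."""
    pos = {lab: i for i, lab in enumerate(labels)}
    m = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        m[pos[a]][pos[p]] += 1
    return m

def accuracy(matrix):
    """Fraction of records on the diagonal, i.e. predicted correctly."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

actual    = [1, 2, 3, 3, 2, 1]
predicted = [1, 2, 3, 2, 2, 3]
cm = confusion_matrix(actual, predicted, labels=[1, 2, 3])
for row in cm:
    print(row)
print(accuracy(cm))
```

Here 4 of the 6 records lie on the diagonal, so the accuracy is 4/6, about 0.667.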

The 182nd record is also predicted correctly.

For a few randomly chosen test records the prediction failed, and for a few it passed. So far, the Random Forest Model is the winner!

Thank You!

Komal
